Substack Post: How My AI-Free Commitment Is Going

My AI Free Commitment Challenge - not originally intended for Substack.

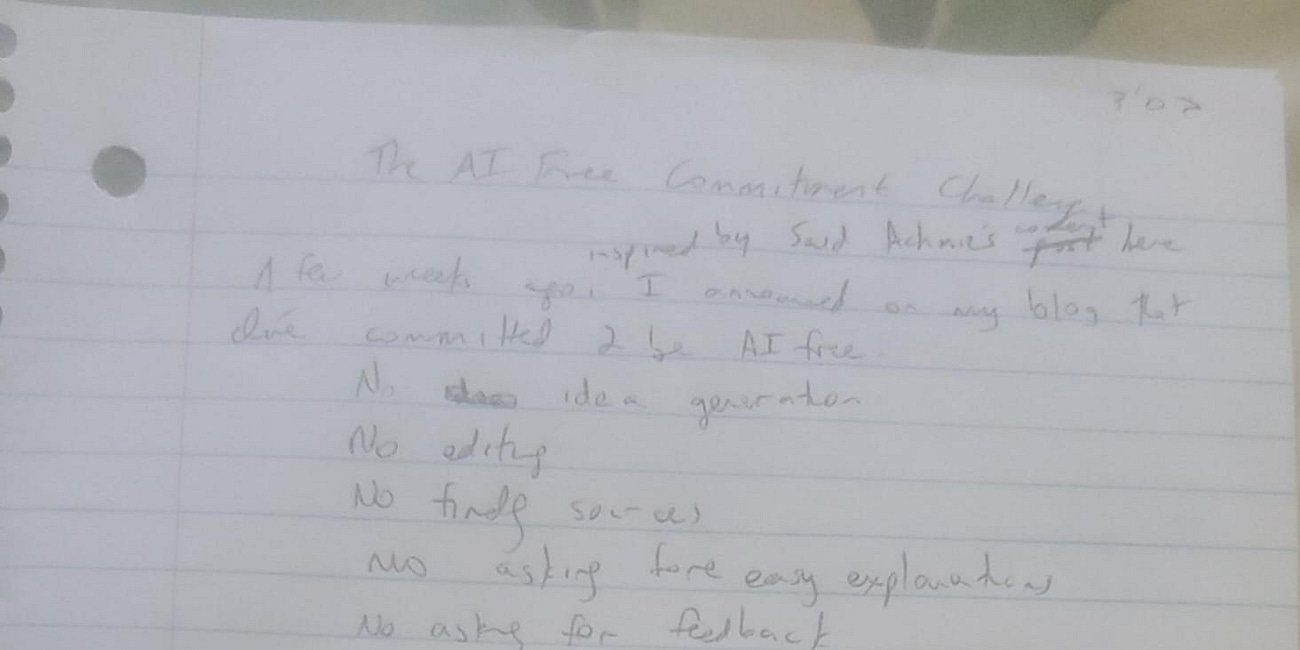

This post is truly old school. It was handwritten into an old notebook, because in order to have the time to write it, I had to give my children my cell phone so that they would watch Paw Patrol and let me write. Images below are the pages as they were originally written in my own handwriting, pen on paper, a relic of antiquity. This was originally posted on Reddit, and edited for my Substack. Please do not click on this post.

How My AI Free Commitment Challenge Is Going

Edit: thanks for linking this on Hacker News, but the link you actually want is

My AI Free Commitment Challenge

A few weeks ago, inspired by a comment I saw online, I announced on my Substack that I had committed to be AI free from now on. I drew up some rules. See the original rules here: Link

From now on, Isha Yiras Hashem is officially AI-free. I have never used AI much, but I'd like to emphasize and clarify this now. All writing, jokes, images, and sources will be humanly flawed and locally sourced, so do the kind human thing and let me know if I made a mistake.

I intended this to cover:

No idea generation

no editing, so no Lex use (Lex is an AI-powered word processor)

no finding sources

no asking for easy explanations

no asking for feedback

This has been surprisingly hard. I had originally allowed for exceptions for translation, but I found that it was too easy to slip from translating to asking questions. I am also an extrovert, and artificial intelligence had been providing valuable feedback, so I didn't have to annoy everyone who knows me in real life, and maybe also a few people who don't know me, but seem like they might be fun to correspond with.

Hoped-for and Actual Positive Consequences:

My natural style and preference is fluid human anyway. I like human generated content, even with flaws, and I don't like how the internet starts to feel more artificial by the day. I don't want to be a part of that. Isha Yiras Hashem always wants to be part of a solution and not the cause of a problem. I like real people, and I want to be a real person.

I'm a consequentialist, not a utilitarian. I'm not good at philosophy, but I'm trying to figure it out, and it seems that consequentialist abstention from AI would be a consistent intellectual position for me to take, and I'm all about being firm and consistent, just ask my kids, who are definitely not watching Paw Patrol.[1]

It is a challenge. If I, a stay-at-home mother, find it this hard to stop using AI to write a packing list, imagine how much harder it would be for people who need it for their jobs. Being hard isn't a reason to give up. I'm not scared of doing hard things. I have given birth multiple times. No one expects me to know anything about AI anyway, even if I did write [AI for Dummies Like Me](https://ishayirashashem.substack.com/p/artificial-intelligence-for-dummies).

I'll be the first person to write this style of post, which has to count for something, maybe an entry into the Guinness Book of World Records.

Negative Consequences I Have Experienced So Far

So far, my experience not using AI has been practically very negative. I have not seen anyone else on the internet becoming more human as a result. I'm not even sure I did the whole consequentialist abstention thing right or if it makes any philosophical sense. My writing is less impressive. But I did do something hard, which I'm proud of. At any rate, here are some of the negatives I have experienced.

Firstly, it made my writing take longer. Pre-AI, I used to spend a lot of time doing dumb things, like checking how to make a link work on Reddit. (I can never remember if it's the rounded things or the square things on the side, and which one is supposed to have the link and which is supposed to have the text, and what the order is.) ChatGPT saved me a lot of time doing this sort of activity.

But idea and language generation is not my writing weakness, and it might be that speeding this up is actually counterproductive for me. I'm naturally a flighty thinker, and being forced to slow down and check punctuation and spelling and how to do links greatly benefits my writing and my written communication. Often, while checking small details, I will notice larger, important details I missed earlier. In general, I'm more interested in communicating well and clearly than in communicating a lot.

Secondly, I've noticed people get more irritated now when I ask questions or say things they think could have been more easily done using ChatGPT. It's the new “let me Google that for you”. Everyone else on the internet is an introvert and they don't want to say one more sentence than is absolutely necessary or be any friendlier than they absolutely have to be, and they definitely don’t want to waste their precious time responding to my questions when they could be spending valuable time gaming or whatever.

Thirdly, well, I was going to write that it takes me longer to write things. I thought this was true, but upon typing this up, I'm not so sure. While ChatGPT can generate a lot of words, I end up spending so much time editing them that I may as well have written it by hand, and the result still doesn't feel like me.

Besides, it may not even be true. I started writing this post at around 7 am this morning. I got all the kids dressed and off to school. And it will be on Reddit by 9:30. Granted, I'm not making images on Canva and I'm not translating anything, but still. So maybe I should count this as ‘to be determined’.

Fourthly, without ChatGPT editing, I seem less sophisticated online, which means that smart people are less likely to respond to my comments. Social signaling is a thing. The reality is that I'm not sophisticated, so I'm just communicating a true fact here, if inadvertently. I'll have to work on my self-acceptance and maybe on my sophistication, although that's very unlikely to happen without a patient human editor.

Finally, I might (G-d forbid!) lose an internet argument once in a while. This is okay, right? Like sometimes I'm going to be wrong or the other person is going to out-argue me. I don't actually have to change my mind, even if I lose the argument. At least not immediately.

Conclusion

Now, as the real human Isha Yiras Hashem, I am morally obligated to conclude with my characteristic Biblical tie-in. The end of Ecclesiastes is “because everything man does will be judged, if it's good or if it's bad.” Traditionally we repeat the second to last verse so as not to end the reading with the word “bad”. It also happens to be my favorite Bible verse of all time.

“At the end, everything is heard. Fear G-d and guard his Commandments, for this is all of man.”

What makes us human? Perhaps this is what makes us human. It certainly seems easier to convince people of than checking a box verifying we are human or identifying objects with wheels or typing. And maybe the more you fear G-d, now that's superhuman intelligence.

Please comment with your thoughts. Especially if you want to see more human content and want to encourage me to stick to my commitment!

So there you have it. I am curious what you think. Is anyone else trying to go AI free? What have your experiences been so far? Do you think I should go back to using AI?

[1] After they are done with Paw Patrol.

I have this thought about AI usage (and new technologies in general), which is that when they're brand-new, there's this "shiny stage" where people play with them, and it's PLAY, and it's great. But then over time, what was once novel becomes ordinary, and we fall into ruts where we're just kind of mindlessly using that technology (whether we need it or not) because we can, and we become its prisoner, and Using The Thing is grim drudgery.

On the other hand, I keep telling myself I'm going to use AI _MORE_, but I gave myself this one requirement... that I'm going to be "in a place" where I'm really giving thought to each ChatGPT dialogue I'm having and not just spewing random questions, but trying to somehow (?) be intentional about my inputs. It turns out that giving myself that requirement + mostly only using browsers on a computer + whatever other habits & tendencies are relevant results in... "not much AI usage."

(Technically, my firrrrst thought of something I might say in response to my post... was an idea I had when I misread "identifying objects with wheels" as "identifying which objects are wheels..." and I was like "hmmm, wheels or Biblical visions of angels? Sometimes those are surprisingly hard to distinguish!")